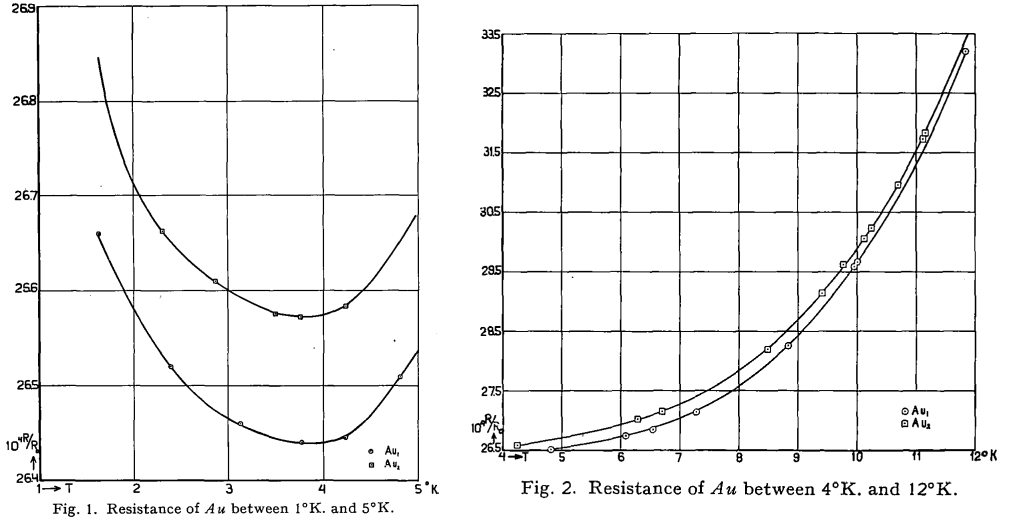

Ferromagnetism is a state of matter whose existence has been known for millennia (Fe3O4, magnetite, is often cited as the first known permanent magnet, although it is actually a ferrimagnet). Coincidentally, it was also the material that took center stage in Expt 8 in this series of posts. Antiferromagnetism is, in some sense, “hidden order”. Although Louis Néel predicted it in 1932, it was not obvious how to convincingly demonstrate that spins align antiparallel to one another when their magnetic moments cancel perfectly: there is no net magnetization to speak of or measure!
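A toy one-dimensional chain makes the “hidden” part concrete. This is a hypothetical illustration, not a model of MnO: moments alternate +1 and -1 on sites spaced a apart, so the net moment a magnetometer would see is exactly zero. The spin pattern, however, repeats every 2a rather than every a, so its Fourier transform has weight at q = π/a, halfway to 2π/a, where scattering from identical atoms peaks. The chain length, the `structure_factor` helper, and the split into “nuclear” and “magnetic” weights are all illustrative assumptions.

```python
import cmath
import math

# Hypothetical toy model, not MnO's real structure: N sites on a 1D chain
# with lattice spacing a, carrying moments of +1 and -1 alternately.
N = 16
a = 1.0
positions = [a * n for n in range(N)]
spins = [(-1) ** n for n in range(N)]

# What a bulk magnetometer measures: the net moment, which cancels exactly.
net_moment = sum(spins)


def structure_factor(weights, q):
    """Return |sum_n w_n exp(i q x_n)|^2 / N at scattering wavevector q."""
    amplitude = sum(w * cmath.exp(1j * q * x) for w, x in zip(weights, positions))
    return abs(amplitude) ** 2 / N


# "Nuclear" scattering sees identical atoms and ignores the spins;
# "magnetic" scattering weights each site by its moment.
nuclear_weights = [1] * N
results = {}
for label, q in [("0", 0.0), ("pi/a", math.pi / a), ("2pi/a", 2 * math.pi / a)]:
    results[label] = (structure_factor(nuclear_weights, q),
                      structure_factor(spins, q))

print("net moment:", net_moment)  # net moment: 0
for label, (nuc, mag) in results.items():
    print(f"q = {label:>5}: nuclear {nuc:6.2f}, magnetic {mag:6.2f}")
# q =     0: nuclear  16.00, magnetic   0.00
# q =  pi/a: nuclear   0.00, magnetic  16.00
# q = 2pi/a: nuclear  16.00, magnetic   0.00
```

The magnetic signal shows up only at q = π/a, a position where the atoms alone give nothing. In this sketch, that is what a doubled repeat length looks like in reciprocal space.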

Such a state was hinted at by a phase transition seen in magnetic susceptibility measurements, but the origin of that transition was unclear. In 1949, Clifford Shull and J. Stuart Smart definitively demonstrated that MnO is antiferromagnetic. In their experiment, which used the then-new technique of neutron diffraction, magnetic peaks suddenly appeared below the Néel temperature $T_N$, signaling a doubling of the unit cell. The question of “hidden order” was finally settled: the evidence for antiferromagnetism was almost indisputable! Surprisingly, the original paper explicitly states that it was Smart’s idea to use neutron diffraction to detect antiferromagnetism. Below is the original plot from MnO, showing at least three peaks arising from the antiferromagnetic order: